Part Four: MPTCP

There is an ongoing effort by the IETF to develop a standard for Multipath TCP (MPTCP) that should overcome the aforementioned problem of needing the same IP address to communicate over different paths. In a nutshell, it creates simultaneous streams (subflows) over all available paths, or just over the ones the user selects, transparently to the application that creates the socket. This means that if your software opens a connection from x.x.x.x to y.y.y.y and x.x.x.x, y.y.y.y, or both are multihomed, MPTCP will create more “connections” over the other available paths to distribute the load. If a path disappears, MPTCP shrinks the number of streams accordingly, and if new paths appear, it grows the pool. It started as a way to improve TCP on mobile devices, for both bandwidth and hand-over, but it apparently has uses in other environments as well, and it was certainly worth trying for me.
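
To make the grow/shrink behaviour concrete, here is a toy model of a connection's subflow pool. It is only an illustration under a stated assumption: traffic is split in proportion to each path's capacity, which is not how the real Linux MPTCP scheduler works (it decides per segment, based on RTT and congestion window). The class and method names are hypothetical.

```python
"""Toy model of an MPTCP connection's subflow pool (illustration only).

Assumption: traffic is split across subflows in proportion to each path's
capacity. The real Linux MPTCP scheduler decides per segment, based on RTT
and congestion window; this model only shows how the pool grows and shrinks
as paths come and go. All names here are hypothetical.
"""


class SubflowPool:
    def __init__(self):
        # Maps a (local_ip, remote_ip) path to its capacity in Mbit/s.
        self.paths = {}

    def path_up(self, local_ip, remote_ip, capacity_mbps):
        # A new path appeared: open an additional subflow over it.
        self.paths[(local_ip, remote_ip)] = capacity_mbps

    def path_down(self, local_ip, remote_ip):
        # A path disappeared: drop its subflow; the connection itself survives.
        self.paths.pop((local_ip, remote_ip), None)

    def split(self, nbytes):
        """Return how many bytes each subflow would carry out of nbytes."""
        total = sum(self.paths.values())
        if total == 0:
            raise ConnectionError("no usable path left")
        return {path: nbytes * cap / total for path, cap in self.paths.items()}


if __name__ == "__main__":
    pool = SubflowPool()
    # Two local uplinks towards the same remote server.
    pool.path_up("192.168.230.253", "x.x.x.x", 8.41)
    pool.path_up("192.168.232.253", "x.x.x.x", 8.76)
    print(pool.split(1_000_000))
    pool.path_down("192.168.230.253", "x.x.x.x")  # ADSL1 goes away
    print(pool.split(1_000_000))
```

The application never sees these changes: it keeps writing to one socket while subflows are added and removed underneath it.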

Naturally, any MPTCP implementation requires changes and additions to the original stack, and the Linux Kernel MultiPath TCP project offers just that. To better understand how and why it exists, you can start by watching Christoph Paasch present it at a Google Tech Talk.

In my specific case, what are the advantages of using it as a substitute for the already functional VPN bonding?

- It is less cumbersome than tunnels and bonds. Drop it in, configure multipath routing, and you are good to go.

- It has a smaller overhead: as far as I can observe, only 8 bytes per packet.

- It was created to provide just what I need (albeit for a different purpose), so it solves all kinds of problems with timeouts and connection drops at the core.

- Compared to bonding, it monitors the TCP flow rather than the physical link to detect changes.

- It gives you all the bandwidth you can get from every route and doesn’t limit you to the slowest one, as bonding does.
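
The bandwidth and overhead points above can be put into numbers with a deliberately simplified model. Assumptions, not measurements: round-robin bonding pushes an equal share of packets over every link, so the slowest link caps the total at n × min(rates), while ideal MPTCP scheduling can reach the sum of all link rates. The 8-byte figure is the overhead I observed, taken relative to a full 1500-byte packet. Function names are hypothetical.

```python
"""Simplified throughput model: round-robin bonding vs. MPTCP aggregation.

Assumptions (not measurements): round-robin bonding sends an equal share of
packets over every link, so the slowest link caps the total at
n * min(rates); ideal MPTCP scheduling can use the sum of all link rates.
Real numbers are lower for both because of protocol overhead and loss.
"""


def bonded_rate(rates):
    # Equal share per link: the slowest link saturates first.
    return len(rates) * min(rates)


def mptcp_ideal_rate(rates):
    # Each subflow runs at its own path's speed.
    return sum(rates)


def option_overhead(extra_bytes, mtu=1500):
    # Fraction of each full-size packet taken up by the extra MPTCP bytes.
    return extra_bytes / mtu


if __name__ == "__main__":
    upload_kbps = [786, 746]  # single-line ADSL1/ADSL2 iperf uploads in this post
    print("bonded:", bonded_rate(upload_kbps), "Kbit/s")
    print("mptcp :", mptcp_ideal_rate(upload_kbps), "Kbit/s")
    print(f"8-byte overhead at MTU 1500: {option_overhead(8):.2%}")
```

With two nearly equal ADSL lines the gap between the two models is small; it grows as the lines become more unequal (for example, 10 and 2 units give 4 bonded versus 12 aggregated).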

Certainly, it can’t all be good, and I have to deal with some limitations:

- It is Multipath TCP, so UDP, ICMP, and anything fancier are on their own.

- It is a change to the stack, so if a node doesn’t support it, it doesn’t get it. It will be years until other OSes start to support it, and, as usually happens, there will be various incompatible interpretations for some time.

- Following from the previous point, the implementation is complicated enough that you are better off with the kernel version the project provides.

So, what can I actually do with MPTCP to improve the situation? Clearly, I can’t use it on every single node in my LAN: I have all kinds of systems here, with different OSes, kernels and such. I can’t even run it on my working computer; Fedora 18 is already at kernel 3.8 and there is no way I am going to downgrade. What I can actually do is install the MPTCP kernel on one local node and on the remote end, and then try to bridge between them somehow. I installed the MPTCP kernel on a remote server and on an old notebook locally and played with it for some time. Here is what I tried and found useful.

First, I tried to use MPTCP to get rid of the bonding, which meant running TCP over TCP again. I configured OpenVPN normally, just as in my initial configuration minus the encryption. It did work: OpenVPN was using both routes for a single instance, but soon enough the sessions within the tunnel started to hang, and the throughput within a single session was jumpy at best. I assumed it was caused by the same retransmission and congestion problems as without MPTCP and moved on to the next idea I had.

Since I still wanted my ICMP and UDP traffic to take advantage of multipath, I couldn’t remove the bonded VPN, so I left it where it was, doing its job, and thought for a moment about how I could proxy TCP connections from the LAN to the remote server so that every single connection is converted into an MPTCP-capable one. Theoretically, it could be possible to extend the Linux NAT functionality, but I wasn’t able to find anything even close to that. The only way I found was to use a transparent “SOCKSifier” (SOCKS also supports UDP) on the local node that would redirect TCP traffic to the remote side’s SOCKS server. I installed redsocks on the local node and dante on the remote side, configured redsocks to use the remote dante as its destination proxy, added the needed NAT table rules, and it worked. Both redsocks and dante were using MPTCP just fine, and any TCP connection that passed through them got the advantage of two lines. The bandwidth, though, was comparable to that of the bonded VPN, maybe 1-2% more than over the VPNs. From the traffic dumps I saw that SOCKSv5 has a smaller overhead than OpenVPN, but it does have some; that was probably the reason. I did not dig deeper because I didn’t like how the software behaved: too many open connections, too many interrupts. It didn’t seem stable. A couple of days and a dozen segmentation faults later, I decided to drop it and come back to it when I have some time to spare.
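
For reference, this is roughly what a SOCKSv5 client such as redsocks has to send and receive per redirected TCP connection before the payload flows, following RFC 1928 with the “no authentication” method and an IPv4 destination. It is an illustration of the protocol, not redsocks’ or dante’s actual code, and the function names are hypothetical. With this method, data after the handshake is relayed without any per-packet SOCKS header.

```python
"""Bytes a SOCKS5 client exchanges before relaying one TCP stream.

Based on RFC 1928, "no authentication" method, IPv4 destination. This only
illustrates the per-connection cost; it is not redsocks' or dante's code,
and the function names are hypothetical.
"""
import ipaddress
import struct

SOCKS_VERSION = 5


def greeting():
    # VER, NMETHODS, METHODS (0x00 = no authentication required)
    return bytes([SOCKS_VERSION, 1, 0x00])


def connect_request(ipv4, port):
    # VER, CMD=CONNECT (1), RSV, ATYP=IPv4 (1), DST.ADDR (4 bytes), DST.PORT
    addr = ipaddress.IPv4Address(ipv4).packed
    header = struct.pack("!BBBB", SOCKS_VERSION, 1, 0, 1)
    return header + addr + struct.pack("!H", port)


def handshake_bytes():
    """(client, server) handshake sizes in bytes for one IPv4 CONNECT."""
    client = len(greeting()) + len(connect_request("0.0.0.0", 0))
    server = 2 + 10  # method selection reply + IPv4 CONNECT reply
    return client, server


if __name__ == "__main__":
    print(connect_request("192.0.2.10", 80).hex())
    print("handshake (client, server):", handshake_bytes())
```

So the SOCKS cost is a fixed handshake per connection (plus the extra round trips), rather than a header on every packet.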

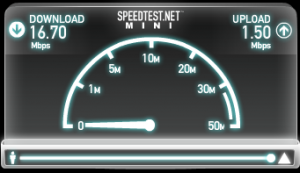

Finally, I came to a compromise between the free time I have for the project and my greed for bandwidth. I decided that I only needed maximum bandwidth in two specific cases. The first, and apparently the most important, is to screenshot speedtest results and give my ego a boost; the other is the occasional download or upload of files to/from cloud storage and CDNs. The rest of my needs can be satisfied by the reliability the bonded VPNs provide. Since both bandwidth-hungry cases were using HTTP (well, also HTTPS, but less), I decided to try just that: use two HTTP proxies with MPTCP and leave the rest to the VPNs.

I installed Squid on both the local and the remote end and configured the local one to use the remote one as its parent:

and made it transparent:

This gave me results I am satisfied with for now: all HTTP traffic gained a 1.9x boost in speed, and the rest of the traffic a 1.5x boost plus the redundancy.

Below are the results of 100MB data transfers in various combinations:

Uploads

iperf on the local node with default options, sending over ADSL1 to a node on the same switch as the remote server

Local:

TCP window size: 22.3 KByte (default)

local 192.168.230.253 port 8000 connected with x.x.x.y port 8000

0.0-1067.1 sec 100 MBytes 786 Kbits/sec

Remote:

0.0-1068.4 sec 100 MBytes 785 Kbits/sec
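
The reported rates can be recomputed from the transferred amount and the elapsed time. A small sketch, assuming iperf 2 defaults where “MBytes” means 2**20 bytes while “Kbits/sec” and “Mbits/sec” use powers of 1000; the figures in these runs are consistent with that assumption. The function name is hypothetical.

```python
"""Recompute iperf's reported rate from transferred data and elapsed time.

Assumption: iperf 2 defaults, where "MBytes" means 2**20 bytes while
"Kbits/sec" and "Mbits/sec" use powers of 1000.
"""


def iperf_rate_kbps(mbytes, seconds):
    bits = mbytes * 2**20 * 8  # binary megabytes -> bits
    return bits / seconds / 1000  # decimal kilobits per second


runs = {
    "upload ADSL1": (100, 1067.1),
    "upload ADSL2": (100, 1124.1),
    "download ADSL2": (100, 95.8),
}

for name, (mb, secs) in runs.items():
    print(f"{name}: {iperf_rate_kbps(mb, secs):.0f} Kbit/s")
```

For the ADSL1 upload this gives about 786 Kbit/s, matching the local iperf output.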

iperf on the local node with default options, sending over ADSL2 to a node on the same switch as the remote server

Local:

Binding to local address 192.168.232.253

TCP window size: 22.6 KByte (default)

local 192.168.232.253 port 8000 connected with x.x.x.y port 8000

0.0-1124.1 sec 100 MBytes 746 Kbits/sec

Remote:

0.0-1125.8 sec 100 MBytes 745 Kbits/sec

iperf on the local node sending to the remote node using MPTCP

Local:

TCP window size: 44.6 KByte (default)

local 192.168.230.253 port 41717 connected with x.x.x.x port 5000

0.0- 5.1 sec 1.00 MBytes 1.65 Mbits/sec

Downloads

iperf with default options on a node on the same switch as the remote server, sending to the public IP of ADSL1, which is forwarded to the local node

Remote:

TCP window size: 16.0 KByte (default)

local x.x.x.y port 52744 connected with y.y.y.y port 8000

0.0-99.7 sec 100 MBytes 8.41 Mbits/sec

Local:

iperf with default options on a node on the same switch as the remote server, sending to the public IP of ADSL2, which is forwarded to the local node

Remote:

TCP window size: 16.0 KByte (default)

local x.x.x.y port 41700 connected with y.y.y.y port 8000

0.0-95.8 sec 100 MBytes 8.76 Mbits/sec

Local:

0.0-96.3 sec 100 MBytes 8.71 Mbits/sec

The local node downloading with wget from the remote node using MPTCP (I can’t do that with iperf):

16.16 Mbits/sec
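
To put the 1.9x figure in context, the speed-up of MPTCP over each single line can be computed directly from the rates above (all in Mbit/s, copied from the iperf and wget results). The helper names are hypothetical.

```python
"""Speed-up of MPTCP over each single ADSL line, using the results above.

All rates in Mbit/s, copied from the iperf and wget results in this post.
"""


def speedup(mptcp_rate, single_rate):
    return mptcp_rate / single_rate


def share_of_combined(mptcp_rate, single_rates):
    # Fraction of the sum of the individual lines that MPTCP achieved.
    return mptcp_rate / sum(single_rates)


single = {
    "upload": {"ADSL1": 0.786, "ADSL2": 0.746},
    "download": {"ADSL1": 8.41, "ADSL2": 8.76},
}
mptcp = {"upload": 1.65, "download": 16.16}

for direction, lines in single.items():
    for line, rate in lines.items():
        print(f"{direction}: {speedup(mptcp[direction], rate):.2f}x vs {line} alone")
    share = share_of_combined(mptcp[direction], lines.values())
    print(f"{direction}: {share:.0%} of both lines combined")
```

The download comes out at roughly 1.84x to 1.92x a single line, about 94% of both lines combined. Treat the upload ratio as rough: the MPTCP iperf upload moved only 1 MB in about 5 seconds versus 100 MB for the single-line runs, and it even lands slightly above the sum of both lines.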

The only things left for me to talk about are some minor problems and gotchas I found.